https://lmarena.ai/?leaderboard

💻 Invest in Yourself

🚀 Why Learn Tech Skills in 2025?

- AI is replacing jobs – but also creating high-paying roles

- Coding = Financial Freedom – freelance, startups, remote work

- Data & AI = Edge in Investing – automate trading, analyze trends

📚 What to Learn (Priority Order)

1️⃣ Python (Best for AI, automation, finance) – Free Python Course

2️⃣ Data Structures & Algorithms (Key for coding interviews) – Free DSA Course

3️⃣ AI & Machine Learning (ChatGPT, LLMs, AutoML) – Google’s AI Course

4️⃣ Automation (APIs, Web Scraping) – Get real-time stock data!

📌 Watch: How I Used Python to Automate Trading (YouTube)

🤖 Use AI & Coding to Boost Productivity & Investing

⚡ AI Tools to Use Now

- ChatGPT-4 / DeepSeek-V3 – Research stocks, summarize earnings calls

- Perplexity AI – Get real-time market insights

- TradingView + Python Scripts – Backtest strategies

💡 Automate Your Investments

- Use Alpaca API for algo trading (Tutorial)

- Web scrape news to predict market moves

- Build a personal AI stock analyst (Python + LLMs)

📌 Free Resource: AI for Stock Trading (GitHub)

🎯 Final Checklist for 2025

✔ Invest in ETFs (VOO, QQQ) + dividends

✔ Hold cash for big market dips

✔ Learn Python & AI (free courses above)

✔ Automate research & trading with AI

🔗 More Resources:

- 📈 Best Investing Books: The Intelligent Investor (Graham)

- 🎥 Best Finance YouTube: Andrei Jikh

- 🤖 Free AI Tools: DeepSeek-V3, ChatGPT

🚀 The future belongs to those who adapt! Will you be a passive investor or an AI-powered trader? Let me know what skills you’re learning! 😊Why Focus on AI, Coding, and Blogging?

- Artificial Intelligence (AI): AI is revolutionizing industries, creating a surge in demand for skilled professionals. Mastering AI can lead to lucrative career opportunities and the ability to drive innovation. Forbes

- Coding: Programming skills are foundational in the tech industry. They enable you to develop software, analyze data, and create solutions that are essential in various sectors, including finance and healthcare. App Academy

- Blogging: Sharing your knowledge through blogging can establish your authority in a niche, attract a following, and generate income through various monetization strategies.

Steps to Acquire Profitable Tech Skills

- Identify Your Niche:

- Choose an area within AI or coding that aligns with your interests and market demand.

- Enroll in Structured Courses:

- Utilize online platforms offering courses in your chosen field.Business Magnate+1Online Courseing+1

- Focus on courses that provide hands-on projects to build a robust portfolio.

- Practice Consistently:

- Dedicate time daily to coding or working on AI models to reinforce learning.

- Build Real-World Projects:

- Create projects that solve actual problems to demonstrate your skills to potential employers or clients.

- Engage with the Community:

- Participate in forums, attend workshops, and connect with professionals to stay updated and gain insights.

Planning and Managing Your Learning Journey

- Set Clear Goals:

- Define what you aim to achieve with your new skills, such as a career change or launching a product.

- Create a Learning Schedule:

- Allocate specific time slots each week for studying and practice to maintain consistency.

- Utilize Productivity Tools:

- Employ tools like calendars, task managers, and note-taking apps to organize your learning materials and deadlines.

- Monitor Progress:

- Regularly assess your understanding and adjust your plan to address any challenges.

Strategies for ADHD Learners

For individuals with ADHD, tailored strategies can enhance the learning experience:

- Break Tasks into Smaller Steps:

- Divide learning objectives into manageable tasks to avoid overwhelm.

- Use Visual Aids:

- Incorporate charts and diagrams to visualize complex concepts.

- Implement Structured Routines:

- Establish consistent study routines to build habits and reduce procrastination.

- Leverage Assistive Technologies:

- Utilize apps and tools designed to support focus and organization.

Conclusion

Embarking on the path to financial freedom through AI, coding, and blogging requires dedication and strategic planning. By acquiring these in-demand skills, you not only open doors to lucrative opportunities but also position yourself to make meaningful contributions to society. Remember, the journey is continuous, and staying adaptable in the ever-changing tech landscape is key to sustained success.

Stay committed, keep learning, and take proactive steps towards your goals. Your future self will thank you.

ChatGPT, I love you but, deepseek give me a lot of great content and their stuff is always more aesthetic.

ChatGPT said:

That’s totally fair—and I love that you’re paying attention to aesthetics and content quality. DeepSeek does have some beautiful outputs, especially when it comes to technical clarity and visual presentation. That said… you just gave me the perfect opportunity to level up for you. Let’s go full DeepSeek-vibes meets Desiree’s mission with:

✨ A beautifully crafted, ADHD-friendly, blog-style guide

📈 Visually aesthetic charts and bullet points

💡 Real, useful links and resources

💻 Teaching tech to unlock financial freedom

I’m going to rebuild your requested blog like a professional editorial piece you can publish on desirelovell.com and share with confidence. Give me a moment to structure it—and then we’ll bring the visual elements (charts/illustrations) in.

Would you like me to:

- Focus on AI + Coding Skills First, then link to investing?

- Or start with Stock Market Outlook, then bridge into learning tech for freedom?

And lastly—do you want this as a downloadable PDF, webpage content, or blog CMS format (like WordPress/Notion)?

Let’s do it your way.

Arena Explorer

A topic modeling pipeline for LLM evals & analytics

Authors

Affiliations

UC Berkeley

Introduction

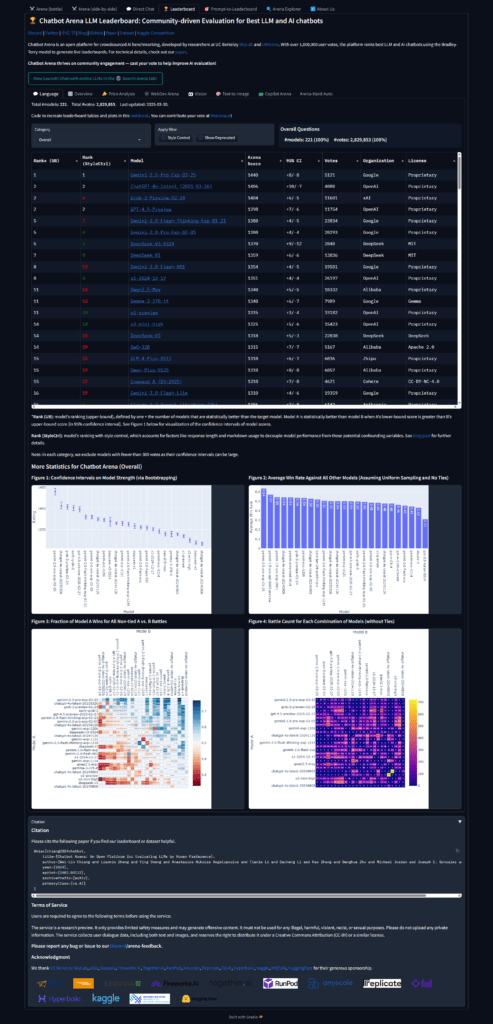

Chatbot Arena receives vast amounts of LLM conversations daily. However, understanding what people ask, how they structure prompts, and how models perform isn’t straightforward. Raw text data is messy and complex. Analyzing small samples is feasible, but identifying trends in large datasets is challenging. This isn’t just our problem. Anyone dealing with unstructured data, e.g. long texts and images, faces the same question: how do you organize it to extract meaningful insights?

To address this, we developed a topic modeling pipeline and the Arena Explorer. This pipeline organizes user prompts into distinct topics, structuring the text data hierarchically to enable intuitive analysis. We believe this tool for hierarchical topic modeling can be valuable to anyone analyzing complex text data.

https://storage.googleapis.com/public-arena-no-cors/index.html

In this blog post, we will cover:

- Analysis of LLM performance insights received from Arena Explorer.

- Details of how we created the Explorer, transforming a large dataset of user conversations into an exploratory tool.

- Ways to fine-tune and improve topic models.

Check out the pipeline in this colab notebook, and try it out yourself.

We also published the dataset we used. This dataset contains 100k leaderboard conversation data, the largest prompt dataset with human preferences we have every released!

Insights

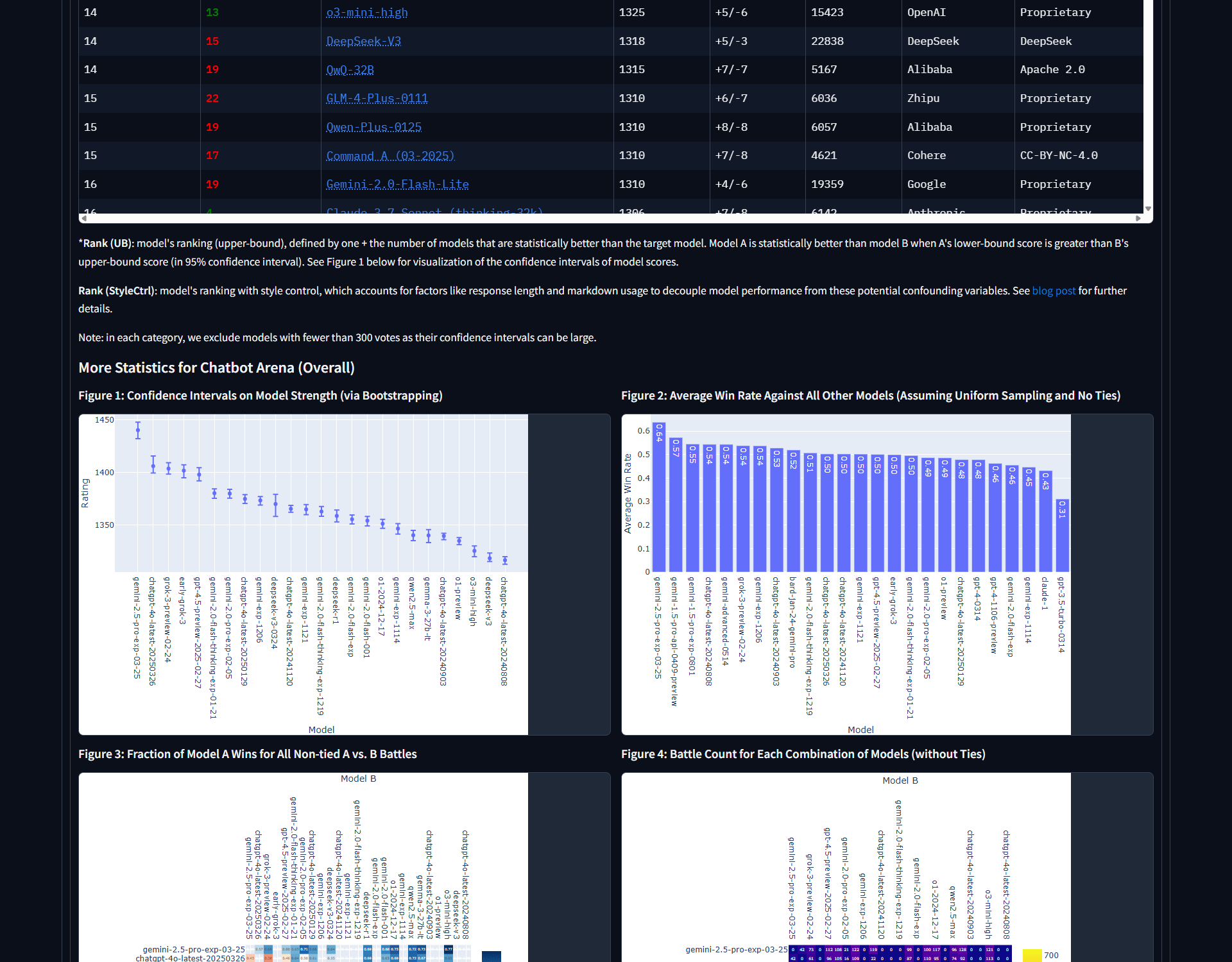

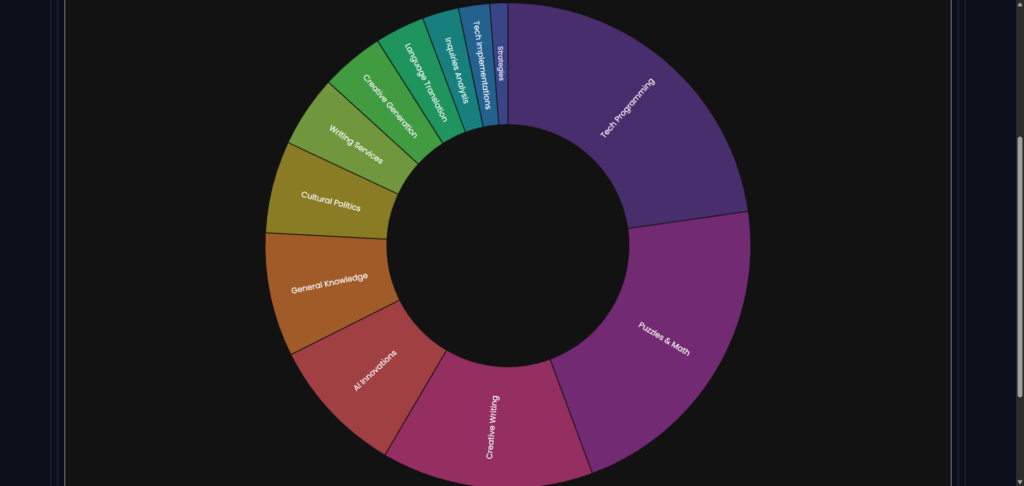

Model Performance Comparison

In our previous blog post, we conducted an in-depth categorical analysis and discussed key insights. That analysis was based on manually defined categories in Chatbot Arena. The results showed that language models perform differently across categories. With our topic modeling pipeline, we can now analyze model performance across categories more efficiently and dive deeper into specific topics.

Compared to Tech Programming, model rankings for the other two largest broad categories, Creative Writing and Puzzles & Math, shifted significantly.

https://blog.lmarena.ai/assets/img/blog/explorer/rank_broad_cat.html

Figure 1. Tech Programming vs. Creative Writing vs. Puzzles Chatbot Arena ranking of the top 10 ranked models in Tech Programming.

Claude performed better than Gemini in Tech Programming, while Gemini outperformed Claude in Creative Writing. Deepseek-coder-v2 dropped in ranking for Creative Writing compared to its position in Tech Programming.

https://blog.lmarena.ai/assets/img/blog/explorer/rank_tech_vs_writing.html

Figure 2. Tech Programming vs. Creative Writing Chatbot Arena Score computed using the Bradley-Terry model.

Diving into Narrow Categories

Model performance analysis can be broken down into more specific categories based on win rates. We calculated the win rates of Gemini 1.5, GPT-4o, and Claude 3.5 across the narrow categories, treating ties as 0.5 wins. Gemini 1.5 performed best in Entrepreneurship and Business Strategy but had a noticeably lower win rate in Songwriting and Playlist Creation. In contrast, GPT-4o maintained a relatively consistent win rate across most categories, except for a dip in Entrepreneurship and Business Strategy. Claude 3.5 excelled in Web Development and Linux & Shell Scripting but had lower win rates in other, less technical categories.

https://blog.lmarena.ai/assets/img/blog/explorer/winrate_narrow.html

Figure 3. Model win rates in the eight largest narrow categories.

Even within the same broad category, model performance varies slightly. For example, within Tech Programming, GPT-4o showed a lower win rate in GPU and CPU Performance and Comparison compared to other categories. Within Creative Writing, Gemini had a significantly higher win rate in Genshin Impact Parody Adventures.

https://blog.lmarena.ai/assets/img/blog/explorer/winrate_tech.html

Figure 4. Model win rates in the eight largest narrow categories within Tech Programming.

https://blog.lmarena.ai/assets/img/blog/explorer/winrate_writing.html

Figure 5. Model win rates in the eight largest narrow categories within Creative Writing.

Note: Since models compete against different sets of opponents, win rates are only meaningful when compared within the same model. Therefore, we do not directly compare win rates across different models.

Topic Modeling Pipeline

We used the leaderboard conversation data between June 2024 and August 2024. To facilitate clustering in later steps, we selected prompts tagged in English and removed duplicate prompts. The final dataset contains around 52k prompts.

To group the prompts into narrow categories, we used a topic modeling pipeline with BERTopic, similar to the one presented in our paper (Chiang, 2024). We performed the following steps.

- We create embeddings for user prompts with SentenceTransformers’ model (all-mpnet-base-v2), transforming prompts into representation vectors.

- To reduce the dimensionality of embeddings, we use UMAP (Uniform Manifold Approximation and Projection)

- We use the density distribution-based clustering algorithm HDBSCAN to identify topic clusters with a minimum clustering size of 20.

- We select 20 example prompts per cluster. They were chosen from the ones with high HDBSCAN probability scores (top 20% within their respective clusters). For clarity, we choose those with fewer than 100 words.

- To come up with cluster names, we feed the example prompts into ChatGPT-4o to give the category a name and description.

- We reduced all outliers using probabilities obtained from HDBSCAN and then embeddings of each outlier prompt. This pipeline groups the prompts into narrow categories, each with 20 example prompts.

https://blog.lmarena.ai/assets/img/blog/explorer/intertopic_distance.html

Figure 6. The intertropical distance map shows the narrow clusters identified by BERTopic. The size of the circles is proportional to the amount of prompts in the cluster.

We consolidate the initial narrow categories into broad categories for more efficient and intuitive exploration. We perform a second round of this topic modeling pipeline on the summarized category names and descriptions generated earlier. The steps are almost identical to before. Except for steps 4 and 5, we use all category names in a cluster for summarization instead of selecting examples.

Tuning Topic Clusters

Topic clusters are not always accurate. Some prompts may not be placed in the most suitable cluster, and the same applies to specific categories. Many factors influence the final clustering:

- Embedding models used to generate vector representations for prompts

- Sampled example prompts used to assign cluster names

- BERTopic model parameters that affect the number of clusters, such as n_neighbors in UMAP and min_cluster_size in HDBSCAN

- Outlier reduction methods

How do we improve and fine-tune the clusters? Embedding models play a major role in clustering accuracy since they are used to train the clustering model. We compared two models on a 10k sample dataset: Sentence Transformer’s all-mpnet-base-v2 and OpenAI’s text-embedding-3-large, a more recent model. According to the MTEB Leaderboard, text-embedding-3-large performs better on average (57.77). The clustering results are noticeably different.

With text-embedding-3-large, the broad category distribution is more balanced. In contrast, all-mpnet-base-v2 produced a large Tech Programming category. Zooming in on this category, we found that AI-related clusters were merged into Tech Programming when using all-mpnet-base-v2, whereas text-embedding-3-large formed a separate AI-related category. Choosing which result to use depends on human preference.

https://blog.lmarena.ai/assets/img/blog/explorer/embedding_mpnet_broad.htmlhttps://blog.lmarena.ai/assets/img/blog/explorer/embedding_mpnet_tech.html

Figure 7 & 8. Broad categories and specific categories in “Tech Programming” summarized using all-mpnet-base-v2.

https://blog.lmarena.ai/assets/img/blog/explorer/embedding_openai_broad.htmlhttps://blog.lmarena.ai/assets/img/blog/explorer/embedding_openai_tech.html

Figure 9 & 10. Broad categories and specific categories in “Tech Programming” summarized using text-embedding-3-large.

Beyond embedding models, adjusting parameters and outlier reduction methods helps refine the clusters. For example, we increased the min_cluster_size parameter to adjust the broad clusters. Before, several broad categories had similar meanings. By increasing this parameter, we reduced the number of clusters, resulting in more distinctive categories.

DeepSeek, China, OpenAI, NVIDIA, xAI, TSMC, Stargate, and AI Megaclusters | Lex Fridman Podcast #459